Photonics for AI hardware acceleration is the topic of this blog article.

Introduction: Integrated Photonics for AI Hardware Acceleration

Artificial intelligence (AI) models are growing exponentially in complexity and data demands. Training large neural networks like GPT and image classifiers requires massive computational resources, often consuming megawatts of power in data centers. Traditional electronic processors, even specialized GPUs, face physical and thermal bottlenecks.

Integrated photonics, a technology that generates, routes, and manipulates light on a chip, is attracting growing interest as a way around these limits. By integrating optical circuits on silicon or other photonic platforms, engineers are building a new class of photonic AI accelerators designed to run machine learning workloads faster and with less energy than purely electronic hardware.

Why Electronics Is Hitting the Wall

For decades, Moore’s Law and Dennard scaling drove exponential improvements in transistor density and efficiency. However, as transistors approach atomic scales, further miniaturization brings diminishing returns. Data transfer, not computation, has now become the limiting factor.

Key bottlenecks in electronic AI hardware:

Memory bandwidth: Moving data between GPU and memory dominates energy costs.

Heat dissipation: Power-hungry data centers generate enormous thermal loads.

Latency: Electrical interconnects limit communication between processing nodes.

This is where photonics offers a transformative advantage.

How Photonic Accelerators Work

Photonic AI accelerators use light instead of electrons to perform computations. Optical signals can be transmitted, multiplied, and interfered directly on-chip using modulators, waveguides, and detectors.

At the heart of many architectures lies the Mach–Zehnder interferometer (MZI). A single MZI applies a programmable 2×2 linear transformation to two optical modes, and a mesh of MZIs implements a larger matrix multiplication, the fundamental operation in neural networks. The phase settings of each device encode the weights, and the multiplication completes in the time light takes to cross the chip.
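
To make the interference idea concrete, here is a minimal sketch of an idealized, lossless MZI in plain Python. The layout (an input phase shifter, a 50:50 coupler, an internal phase shifter, then a second 50:50 coupler) and the names `mzi` and `output_powers` are assumptions chosen for illustration, not a description of any particular chip.

```python
import cmath
import math


def matmul2(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi(theta, phi):
    """Transfer matrix of an idealized, lossless MZI (assumed layout).

    Light passes through: phase shifter phi -> 50:50 coupler ->
    phase shifter theta -> 50:50 coupler. Both shifters sit on the first arm.
    """
    s = 1 / math.sqrt(2)
    coupler = [[s, 1j * s], [1j * s, s]]

    def shifter(p):
        return [[cmath.exp(1j * p), 0], [0, 1]]

    return matmul2(coupler, matmul2(shifter(theta), matmul2(coupler, shifter(phi))))


def output_powers(theta, phi, amplitudes):
    """Optical power at the two output ports for two complex input amplitudes."""
    t = mzi(theta, phi)
    fields = [t[i][0] * amplitudes[0] + t[i][1] * amplitudes[1] for i in range(2)]
    return [abs(f) ** 2 for f in fields]


# Light enters the first port only; sweep the internal phase.
for theta in (0.0, math.pi / 2, math.pi):
    print(round(theta, 3), [round(p, 3) for p in output_powers(theta, 0.0, [1, 0])])
```

With light entering the first port only, `theta = 0` sends all power to the second output, `theta = pi` keeps it in the first, and values in between split it continuously. That tunable split is what lets a phase setting act as a weight.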

| Core Component | Function in AI Accelerator | Photonics Implementation |
|---|---|---|
| Waveguide | Routes optical signals | Silicon or silicon nitride channels |
| Modulator | Encodes input signals | Electro-optic or thermo-optic phase shifters |
| Detector | Converts optical signals to electrical | Photodiodes |
| Interferometer Network | Executes matrix multiplication | Mach–Zehnder arrays |
| Laser Source | Provides optical carrier | On-chip or fiber-coupled laser diode |

This approach can reduce latency while increasing computational throughput and energy efficiency. Because photons carry no charge, optical signals avoid the resistive heating and capacitive crosstalk that limit copper wiring, which enables highly parallel, high-bandwidth processing.

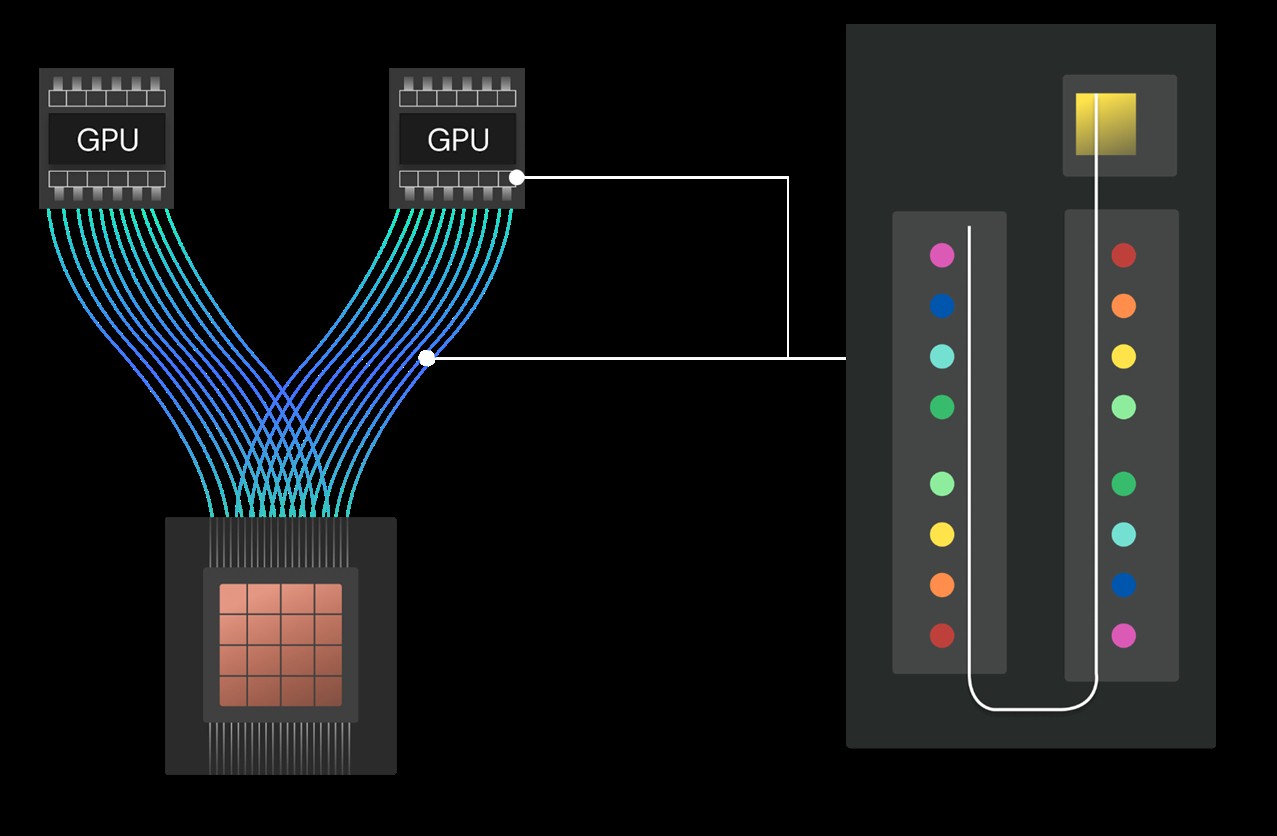

Optical enhancement of AI interconnect bandwidth (courtesy of The Next Platform)

Photonics for AI Hardware Acceleration: Recent Breakthroughs

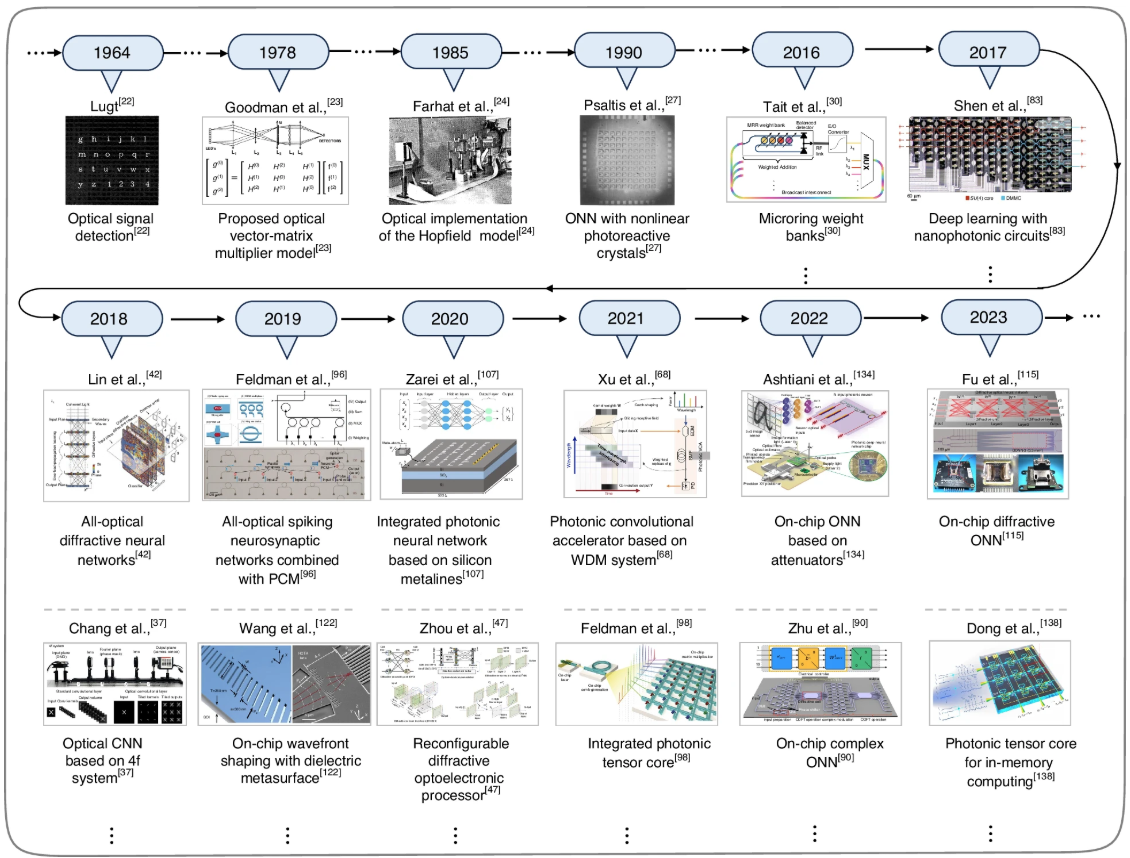

The last five years have seen remarkable breakthroughs in the development and commercialization of photonic AI accelerators. These systems are rapidly evolving from research prototypes into real products targeting datacenter inference, edge computing, and even mobile platforms. Several startups and academic teams are shaping the landscape by pushing the boundaries of optical matrix computation, chip integration, and system-level scalability.

| Company / Lab | Technology Focus | Key Advancement |
|---|---|---|
| Lightmatter (USA) | Silicon photonic processors | Developed the Envise chip, an optical AI accelerator that performs matrix multiplications using MZI arrays, offering up to 10× energy efficiency over GPUs. |
| Celestial AI (USA) | Optical interconnect fabrics | Introduced Photonic Fabric, a chip-to-chip interconnect using light paths to replace electrical traces, reducing data movement power by over 80%. |
| Lightelligence (USA) | Integrated photonic computing | Demonstrated HERMES, a 64×64 MZI array-based inference engine for real-time neural network processing. |
| Luminous Computing (USA) | Hybrid photonic–electronic architectures | Building an optical training platform that combines photonic matrix multipliers with conventional electronic controllers for end-to-end training acceleration. |
| MIT (PhotonAI Project) | Analog photonic neural networks | Created photonic tensor cores integrated with nonlinear optical activations, achieving inference speeds of tens of TOPS/W. |
| PsiQuantum (USA/UK) | Quantum photonic processing | Leveraging single-photon systems for probabilistic and quantum-enhanced machine learning models. |
| Imperial College London | Reservoir computing with photonics | Explored time-multiplexed optical networks to emulate recurrent neural architectures without electronic feedback. |

1. Silicon Photonics Meets Deep Learning

One of the biggest leaps came from silicon photonics integration, which uses CMOS-compatible manufacturing to produce photonic circuits at scale. Companies like Lightmatter and Lightelligence have successfully fabricated large-scale Mach–Zehnder interferometer (MZI) meshes directly on silicon substrates, combining high performance with cost efficiency.

These optical chips can perform vector–matrix multiplications (VMMs), a core deep learning operation, natively in the optical domain. Because light waves interfere naturally as they propagate, a VMM completes in the time it takes light to cross the mesh, and energy is spent mainly on modulation and detection.
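
As a rough illustration of a coherent VMM, the sketch below pushes a vector of optical field amplitudes through a fixed unitary matrix and then "detects" the output powers. The discrete Fourier transform matrix is used only as a convenient stand-in for a programmed mesh; it is an assumption for the example, as are the function names.

```python
import cmath
import math


def dft_matrix(n):
    """Unitary n x n DFT matrix, standing in for a programmed optical mesh."""
    return [[cmath.exp(-2j * math.pi * r * c / n) / math.sqrt(n) for c in range(n)]
            for r in range(n)]


def propagate(matrix, fields):
    """Coherent vector-matrix product: each output field is a weighted sum of inputs."""
    return [sum(w * x for w, x in zip(row, fields)) for row in matrix]


def detect(fields):
    """Photodetectors respond to optical power, the squared magnitude of each field."""
    return [abs(f) ** 2 for f in fields]


n = 4
# Equal amplitudes and equal phases on every input (total power 1).
in_phase = [1 / math.sqrt(n)] * n
# Same amplitudes, but every other input is shifted in phase by pi.
alternating = [((-1) ** k) / math.sqrt(n) for k in range(n)]

print([round(p, 6) for p in detect(propagate(dft_matrix(n), in_phase))])
print([round(p, 6) for p in detect(propagate(dft_matrix(n), alternating))])
```

Both inputs carry the same total power, yet the in-phase input concentrates it in output 0 and the alternating input concentrates it in output 2. The only difference is the relative phase, which is the interference effect the multiplication relies on.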

2. Optical Interconnects for AI Clusters

A critical limitation in today’s AI systems is not computation itself, but data movement. Optical interconnects transfer data between chips or racks using light, which can cut the energy spent per bit substantially compared with copper links.

Celestial AI’s Photonic Fabric allows data routing via wavelength-encoded optical paths, eliminating the need for conventional copper interposers. This innovation aligns well with Nvidia’s and AMD’s push for chiplet-based architectures, which makes optical links increasingly important for future scalability.

3. Hybrid Photonic–Electronic Systems

Fully optical computation remains a challenge because nonlinear functions (like ReLU) are hard to perform purely in optics. Companies such as Luminous Computing and Lightelligence are thus developing hybrid systems, where optical modules handle the linear operations while CMOS handles nonlinearity and control logic.

This hybrid approach leverages the best of both domains:

Photonics for ultra-fast, parallelizable matrix multiplication

Electronics for nonlinear activation and precision control

The result: reduced latency, minimized power draw, and scalable architectures suitable for both inference and training.

4. Analog Optical Neural Networks (ONNs)

Researchers at MIT, Princeton, and EPFL are pioneering analog optical neural networks, where optical intensities represent continuous-valued signals. Using phase-change materials, memristive photonics, and nanophotonic cavities, they’ve achieved reconfigurable, energy-efficient inference at multi-GHz speeds.

For example:

MIT’s photonic chip demonstrated real-time handwritten digit recognition using interference-based weight encoding.

EPFL’s work with phase-change materials (like GeSbTe) showed tunable optical weights that can be reprogrammed with laser pulses.
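
One consequence of storing weights in a reprogrammable material is that only a finite number of transmission states can be told apart. The sketch below is a toy model of that idea, not a model of any published device: the 16 evenly spaced levels, the 0.1 to 0.9 transmission range, and all function names are assumptions.

```python
def pcm_levels(n_levels=16, t_min=0.1, t_max=0.9):
    """Evenly spaced transmission states of a hypothetical phase-change cell."""
    step = (t_max - t_min) / (n_levels - 1)
    return [t_min + i * step for i in range(n_levels)]


def program_weight(target, levels):
    """Pick the stored state closest to the requested transmission."""
    return min(levels, key=lambda t: abs(t - target))


def weighted_sum(input_powers, transmissions):
    """A photodetector sums the input powers after each is attenuated by its weight."""
    return sum(p * t for p, t in zip(input_powers, transmissions))


levels = pcm_levels()
targets = [0.30, 0.70]
programmed = [program_weight(t, levels) for t in targets]
inputs = [1.0, 0.5]

print("ideal:     ", weighted_sum(inputs, targets))
print("programmed:", weighted_sum(inputs, programmed))
```

The gap between the two printed values is the quantization error introduced by the limited number of states. More distinguishable levels shrink it, which is one reason multi-level programmability matters for analog accuracy.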

The evolution of optical neural networks (courtesy of Nature)

5. Toward Full-System Integration

Recent efforts aim to build complete optical systems, not just processors. Lightmatter’s Envise platform integrates optical cores, electronics, software compilers, and cloud APIs, making it accessible to AI developers.

Meanwhile, Intel and GlobalFoundries have announced joint research on monolithic photonic–electronic integration, indicating that major semiconductor players now see photonics as a key enabler for the post-Moore’s Law era.

6. Energy and Sustainability Impact

A standout advantage of photonic AI hardware lies in energy savings. Datacenters already account for a measurable share of global electricity use (commonly estimated at 1 to 3%), and AI workloads are expected to push that share considerably higher by 2030. Photonic accelerators could help curb this trend. Commonly cited projections include:

10–100× lower energy per MAC (multiply–accumulate) operation

Up to 1,000× reduction in heat generation versus equivalent GPUs

Compact form factors suitable for edge AI devices

These metrics make photonic acceleration not just a performance enhancement, but a sustainability imperative for the AI industry.
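
To see what a 10–100× reduction in energy per MAC would mean for a single inference, here is a back-of-the-envelope calculation. The workload size and the electronic baseline are hypothetical placeholders, not measured values for any GPU or photonic chip.

```python
def energy_joules(macs, picojoules_per_mac):
    """Total energy for a workload, converting picojoules to joules."""
    return macs * picojoules_per_mac * 1e-12


MACS_PER_INFERENCE = 4e9    # hypothetical workload size
BASELINE_PJ_PER_MAC = 1.0   # hypothetical electronic baseline

baseline = energy_joules(MACS_PER_INFERENCE, BASELINE_PJ_PER_MAC)
for factor in (10, 100):
    photonic = energy_joules(MACS_PER_INFERENCE, BASELINE_PJ_PER_MAC / factor)
    print(f"{factor}x lower energy per MAC: "
          f"{baseline * 1e3:.2f} mJ -> {photonic * 1e3:.3f} mJ per inference")
```

This counts only the multiply–accumulate energy. A full system budget would also include the laser, modulators, detectors, and data converters, so real-world savings depend heavily on those overheads.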

Advantages of Integrated Photonics for AI

Energy Efficiency: Photonic interconnects transmit data using photons, consuming far less power than electrons traveling through copper. This results in an order of magnitude reduction in data movement energy.

Massive Parallelism: Multiple wavelengths of light (via wavelength division multiplexing) can carry separate data channels simultaneously, multiplying throughput.

Ultrafast Computation: Optical matrix multiplication completes in the time light takes to traverse the circuit, bypassing transistor switching delays.

Low Latency Interconnects: Optical fibers and on-chip waveguides can connect multiple cores or racks with negligible latency compared to electrical systems.

Scalability with CMOS Compatibility: Silicon photonics fabrication leverages existing CMOS foundries, making mass production feasible.
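
The parallelism from wavelength division multiplexing can be pictured as several input vectors sharing one pass through the same weights. The sketch below assumes, for simplicity, that the weights respond identically at every wavelength, which real devices only approximate; the channel labels and function names are hypothetical.

```python
def matvec(weights, x):
    """Plain matrix-vector product."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def wdm_pass(weights, inputs_by_channel):
    """Apply the same weights to every wavelength channel in a single pass."""
    return {channel: matvec(weights, x) for channel, x in inputs_by_channel.items()}


def aggregate_rate_gbps(n_channels, rate_per_channel_gbps):
    """Total link throughput when each wavelength carries an independent stream."""
    return n_channels * rate_per_channel_gbps


weights = [[0.5, 0.25], [0.0, 1.0]]
inputs = {
    "lambda_0": [1.0, 0.0],
    "lambda_1": [0.0, 1.0],
    "lambda_2": [1.0, 1.0],
}
outputs = wdm_pass(weights, inputs)
for channel, y in outputs.items():
    print(channel, y)

print(aggregate_rate_gbps(8, 25), "Gb/s across 8 channels at 25 Gb/s each")
```

Three input vectors are processed in the time one would take on a single wavelength, and the same multiplication applies to link bandwidth: throughput scales with the number of channels the hardware can separate.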

Challenges in Scaling Photonic AI Chips

While the potential is enormous, several technical barriers remain before large-scale deployment:

| Challenge | Impact | Current Approach |
|---|---|---|
| Integration of lasers and detectors on silicon | Limits full-chip photonic integration | Heterogeneous integration with InP or GaAs |
| Thermal stability | Phase drift affects interferometer accuracy | Active thermal control and calibration algorithms |
| Nonlinear activation implementation | Optical components are linear by nature | Hybrid photonic-electronic architectures |
| Error accumulation in analog computation | Reduces inference accuracy | Digital correction and hybrid feedback loops |
| Fabrication variability | Affects device yield | Machine learning-based calibration techniques |
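
The thermal stability row can be illustrated with a small numerical sketch. It assumes an ideal interferometer whose output power at one port follows sin²(θ/2), treats drift as a fixed phase offset, and models calibration as subtracting a measured offset. The function names and drift value are hypothetical.

```python
import math


def port_power(theta):
    """Fraction of input power at one output port of an ideal MZI: sin^2(theta / 2)."""
    return math.sin(theta / 2) ** 2


def phase_for_weight(w):
    """Phase in [0, pi] that sets the port power to weight w, for w in [0, 1]."""
    return 2 * math.asin(math.sqrt(w))


def realized_weight(target, drift, correction=0.0):
    """Weight actually applied when the phase has drifted and been partly corrected."""
    return port_power(phase_for_weight(target) + drift - correction)


target = 0.5
drift = 0.1  # radians, hypothetical thermal drift

print("no drift:  ", round(realized_weight(target, 0.0), 4))
print("drifted:   ", round(realized_weight(target, drift), 4))
print("calibrated:", round(realized_weight(target, drift, correction=drift), 4))
```

A drift of only 0.1 rad moves a weight of 0.5 by about 0.05, a 10% relative error. This is why active thermal control and calibration loops are needed for interferometer-based weights.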

Research continues toward fully photonic neural networks that minimize or eliminate electronic assistance.

Applications Beyond Datacenters

While AI acceleration in cloud servers is the most visible market, integrated photonics is also finding its way into edge, interconnect, and sensing applications.

1. Edge AI and Embedded Vision

Compact photonic chips could bring inference capabilities to drones, autonomous vehicles, and mobile robots, enabling faster decision-making with lower power draw.

2. Optical Interconnects for Data Centers

Replacing copper links with optical interconnects reduces energy per bit transferred and improves scaling in exascale computing systems.

3. Biomedical Imaging and Diagnostics

AI-assisted imaging (OCT, photoacoustics, microscopy) benefits from real-time optical processing, allowing ultrafast feature extraction from massive datasets.

4. Quantum Machine Learning

Hybrid quantum–photonic systems use entangled photons for probabilistic inference, potentially accelerating quantum-enhanced neural networks.

The Road Ahead: Toward All-Optical AI

As researchers refine nonlinear optical components, on-chip laser integration, and error-tolerant architectures, the dream of fully optical AI computing draws closer. The convergence of photonics, AI, and quantum technologies could enable systems with unprecedented efficiency and intelligence.

Chipmakers and startups are already investing heavily in this direction. Lightelligence’s Hummingbird optical interconnect prototype and Nvidia’s collaborations with Lightmatter suggest that the next generation of GPUs may feature optical co-processors, heralding a hybrid future where light and electrons work in tandem.

Conclusion

Integrated photonics represents the next frontier in AI hardware. By merging the speed of light with the intelligence of algorithms, photonic AI accelerators offer a solution to one of computing’s greatest challenges: the growing gap between data processing demand and energy efficiency.

As manufacturing matures and hybrid designs become mainstream, the line between optical communication and optical computation will blur, ushering in a new era of AI powered by photonics.

Further Reading

Shen, Y. et al., “Deep Learning with Coherent Nanophotonic Circuits,” Nature Photonics, 2017.

Miscuglio, M. & Sorger, V., “Photonic Tensor Cores for Machine Learning,” Applied Physics Reviews, 2020.

Feldmann, J. et al., “Parallel Convolutional Processing Using an Integrated Photonic Tensor Core,” Nature, 2021.

Lightmatter: https://lightmatter.co

Celestial AI: https://celestial.ai